Secure Your Software Supply Chain

The question of whether FLOSS (Free/Libre and Open-Source Software) is more or less secure than proprietary software is often not the right question to ask. The much more important question is: how do you integrate FLOSS components securely into a secure software development process? Moreover, if you think about it, the challenges of securely integrating FLOSS components also apply to other types of third-party components. As a software vendor, you are ultimately responsible for the security of the overall product, regardless of which technologies and components were used in building it (you can either read more, or watch the video of our AppSecEU presentation).

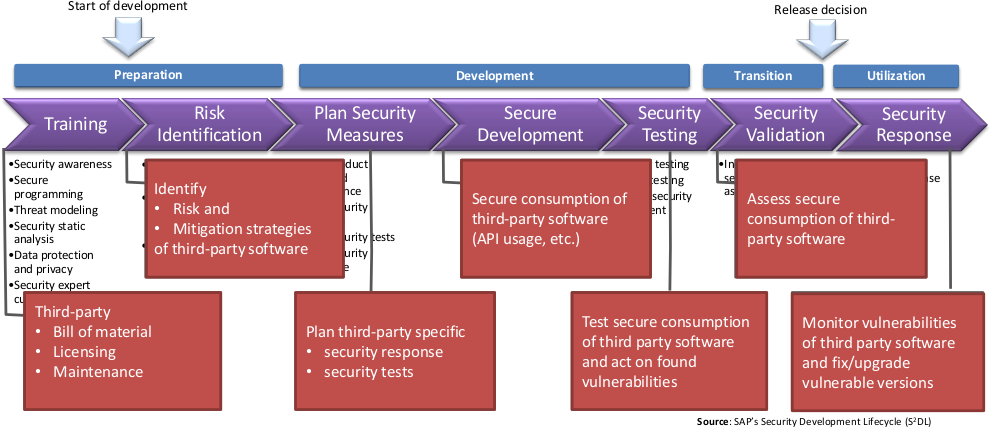

Ideally, third-party components should, security-wise, be treated as your own code and, thus, they impact all aspects of the Secure Software Development Lifecycle.

Before we continue, let’s quickly review the three most important types of third-party components:

- Commercial libraries, outsourcing, and bespoke software: This category comprises all third-party components that are developed or purchased/licensed under a commercial licensing agreement that also includes a support/maintenance agreement. This software is usually only available to developers after the procurement department has bought it (thus, it is easy to track its use within a software company), and any support/maintenance obligations can (at least in principle) be pushed to the supplier.

- Freeware: This is gratis software that you usually get as a binary (or with a license that forbids modification). Good examples of this category are device drivers that you need in order to operate hardware that you bought, or any non-FLOSS software that you can download for free. As this software is gratis, it is usually not covered by a maintenance contract (no warranty), i.e., you have no guarantee of getting fixes or future updates.

- Free/Libre Open Source Software: These are software components that are subject to an approved FLOSS license, which guarantees access to the source code as well as the right to modify the sources and distribute modified versions. This allows you to maintain the components yourself, or to pay the vendor or a third-party provider to maintain a version for you, in case you need a maintenance strategy different from the one offered by the upstream authors. While this is not a strict requirement, these components are usually also available gratis, e.g., as a download from the Internet.

Freeware is ubiquitous, i.e., easily available to developers without triggering formal processes. Thus, it is the most problematic category: its use is usually hard to track, and it is usually hard to get fixes or updates in a timely manner (or any maintenance guarantees). FLOSS is also easily available, but it does not have the maintenance problem, as you can fix it yourself and there are also plenty of companies offering support for FLOSS components. Thus, when you are tracking the use of FLOSS (as well as the use of proprietary third-party components) in your organization, proprietary and FLOSS components differ mainly in one aspect: FLOSS, by definition, provides you with the possibility to fix issues yourself (or to ask an arbitrary third party to do it for you).

Let’s face the truth: any third-party component (like any self-developed code) can contain vulnerabilities that need to be fixed during the lifecycle of the consuming applications. Thus, instead of asking which type of component is more secure (answer: neither, there is bad and good software in both camps), it is more important to control and plan for the risk and effort associated with consuming third-party components.

Thus, FLOSS just provides you with one additional opportunity: fixing the issues yourself. Moreover, when doing research in software security, FLOSS has the additional advantage that data about software versions, vulnerabilities, and fixes is available and can be used for validating research ideas. For example, we are researching methods

- for assessing if a specific version of a software component is actually vulnerable. This helps to avoid unnecessary updates, as public vulnerability databases such as NVD usually over-approximate the range of vulnerable versions,

- for estimating the maintenance effort (and risk) of third-party components that are consumed by a larger product. The goal is to provide, already in the design phase of an application, an estimate of how much long-term effort is caused by the selected third-party components.

We have already published preliminary results [1], and we expect much more to come in the (near) future.

Of course, one would also like to precisely predict the risk (or the likelihood that vulnerabilities are detected in a specific third-party component during the maintenance period of the consuming applications). Sadly, our research shows that this is not (easily) possible and, again, is the wrong question to ask.

Let’s get back to some pragmatic recommendations for using third-party components in general, and FLOSS components in particular, as part of your software development. As we cannot easily predict future vulnerabilities, we focus on strategies for controlling risk and effort, which should be the main focus of a good project manager anyway.

Strategies For Controlling Security Risks

To control (minimize) the risk of third-party components, we recommend integrating their management into your Secure Software Development Lifecycle right from the start and obtaining them from trustworthy sources. If you are in the lucky situation of being able to choose among several components that provide the necessary functionality, we have some tips for that as well:

- Integration in your Secure Software Development Life Cycle:

- Maintain a detailed software inventory: To be able to fix vulnerable third-party components as soon as possible, a complete software inventory is a must. Recall the morning when Heartbleed was published: did you know which of the applications that you offer to customers used the vulnerable OpenSSL version? If you can determine which applications in your offering are affected, you can immediately focus on fixing them, with no time wasted on first finding out what to fix. This minimizes your risk as well as the risk of your customers.

- Actively monitor vulnerability databases: Not all third-party vendors in general, and FLOSS projects in particular, actively notify their customers individually about vulnerabilities or fixes. Thus, it is your obligation to actively monitor the public vulnerability databases daily for new vulnerabilities. This includes not only general databases such as NVD; you also need to monitor project-specific vulnerability pages (not all projects issue CVEs!). For your key third-party components, we also strongly recommend subscribing to their security mailing list (or other news channels).

- Assess project-specific risk of third-party components: The potential risk of a vulnerability in a third-party component depends on how you are using/consuming it. Thus, your threat modeling approach needs to cover the use of third-party components in order to assess the project-specific risks and to develop project-specific mitigation strategies.

- Obtaining components (or sources):

- Download from trustworthy sources: To avoid working with malicious components, you should always ensure that components are obtained from a trustworthy source (i.e., the original upstream vendor or a trustworthy and reliable distributor/third-party provider). While it seems obvious, this boils down to downloading sources only via HTTPS (or other authenticated channels) and checking the signatures or checksums. Finally, don’t forget to check the scripts (Maven scripts, installation scripts, makefiles, etc.) that download dependencies during build or deployment: a surprisingly large number of them download dependencies via non-authenticated channels (nor do they check the validity of the download).

- Project Selection:

- Prefer projects with private bug trackers: Being able to report security issues to a FLOSS project privately allows you to discuss potential fixes with the community without putting your customers, or any other users of the FLOSS component, at risk (e.g., by inadvertently publishing a 0-day).

- Prefer projects with a mature (healthy) Secure Development Lifecycle: As nobody is immune to security vulnerabilities, it is important to select projects that take security seriously. A good indicator is the maturity level of the project’s Secure Software Development Lifecycle, which you can gauge by asking questions such as:

- Does the project document security fixes/patches (reducing the risk of “secret” security fixes)?

- Does the project document security guidelines?

- Does the project use security testing tools?
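The checksum check recommended above under “Download from trustworthy sources” can be sketched as follows. This is a minimal illustration, not a complete download pipeline: the function names `sha256_of_file` and `verify_download` are hypothetical, and it assumes the upstream project publishes a SHA-256 checksum that you obtained via an authenticated channel.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Return the hex-encoded SHA-256 digest of the file at `path`."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in chunks so that large archives need not fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path: Path, expected_sha256: str) -> bool:
    """Compare a downloaded artifact against the published checksum.

    `expected_sha256` is assumed to come from an authenticated source,
    e.g., the project's HTTPS release page (an assumption of this sketch).
    """
    actual = sha256_of_file(path)
    # Normalize whitespace and case before the constant-time comparison.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A build script would call `verify_download` right after fetching a dependency and abort if it returns `False`. Note that a checksum fetched over the same unauthenticated channel as the artifact adds little protection; where the project offers cryptographic signatures, verifying those is the stronger check.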

Strategies For Controlling Effort

To control (minimize) the effort caused by third-party components, the Secure Software Development Lifecycle is again the most important part to look at, followed by the project selection.

- Secure Software Development Life Cycle

- Update early and often: Based on our analysis of API changes and published CVEs for many Java-based FLOSS projects, we recommend updating early and often. Overall, this ensures that you get the latest fixes and keeps the upgrade effort low, as only a few APIs (if any) will have changed between versions.

- Avoid maintaining your own forks (collaborate with the FLOSS community): Maintaining your own forks for fixing security issues increases your effort and does not contribute back to the community. For both you and the community, collaboration is the much better model. And as you selected projects that support a private security bug tracker, you can work with the community without putting your customers at risk.

- Project selection

- Large user base: A large user base has at least two effects. First, more security issues will be detected and reported. While, at first sight, this seems counterproductive because more patches will be released that you need to integrate, it results in a more secure product in the long term (and you want to avoid severe undetected vulnerabilities at all costs). Second, a larger user base means more people who know the component and can provide support.

- Active development community: This is an easy one: an active development community will more likely result in timely and well-documented security fixes and, moreover, in more people who can help you fix issues that you encounter.

- Technologies you are familiar with: This also seems obvious, but it is a recommendation that is often ignored. By choosing technologies you are familiar with, the effort of taking over maintenance yourself, if necessary, is much lower. More importantly, familiarity with the component and its infrastructure also allows you to assess the severity of vulnerabilities in your actual usage scenarios. Lastly, if you choose components that use technologies you are not familiar with, build up the necessary knowledge (give your developers time and resources to get familiar with the new technology).

- Compatible maintenance strategy/lifecycle: Your software has a certain maintenance lifecycle, and so do the third-party components. If you are building software for larger enterprises, it is not unlikely that you are providing support for ten years or more. If your third-party vendors only provide support for one year, you are in an unfortunate situation.

- Smaller (in terms of code size) and less complex might be better: Less code often means fewer vulnerabilities, and less complex implementations are easier to maintain. Thus, if you only need a UUID generator that can be implemented in 20 lines of code, you might prefer to implement it yourself or choose a dedicated project providing this functionality instead of using a complex and generic “utilities” framework that has several hundred thousand lines of code.
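To make the UUID example concrete, here is a minimal sketch of a random (version 4) UUID generator following the RFC 4122 layout. The function name `generate_uuid4` is hypothetical, and the sketch only illustrates how little code such functionality needs; in Python you would normally just use the standard library’s `uuid.uuid4()`.

```python
import secrets


def generate_uuid4() -> str:
    """Return a random (version 4) UUID in canonical 8-4-4-4-12 form."""
    # 16 bytes from the operating system's cryptographic random source.
    raw = bytearray(secrets.token_bytes(16))
    # Set the four version bits to 0100 (version 4).
    raw[6] = (raw[6] & 0x0F) | 0x40
    # Set the two variant bits to 10 (the RFC 4122 variant).
    raw[8] = (raw[8] & 0x3F) | 0x80
    hex_digits = raw.hex()
    groups = (
        hex_digits[0:8],
        hex_digits[8:12],
        hex_digits[12:16],
        hex_digits[16:20],
        hex_digits[20:32],
    )
    return "-".join(groups)
```

The whole generator fits comfortably within the 20 lines mentioned above and has a single dependency on the language’s own random source, which keeps the code you have to monitor and maintain small.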